
kurthatlevik: D365 Importing JSON data the hard way!

Source: https://kurthatlevik.com/2020/04/13/...-the-hard-way/

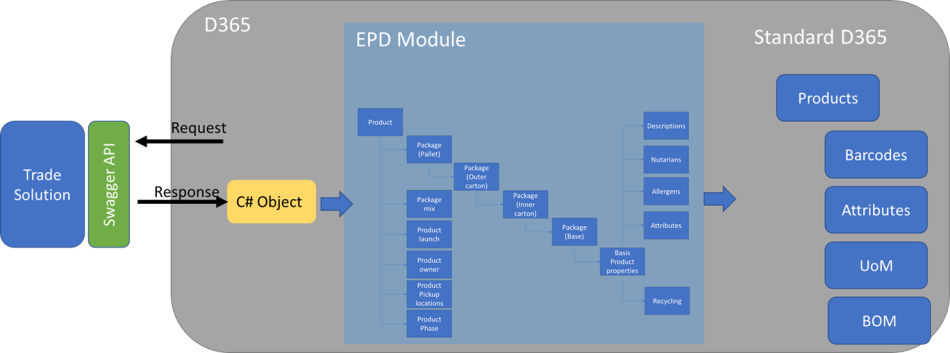

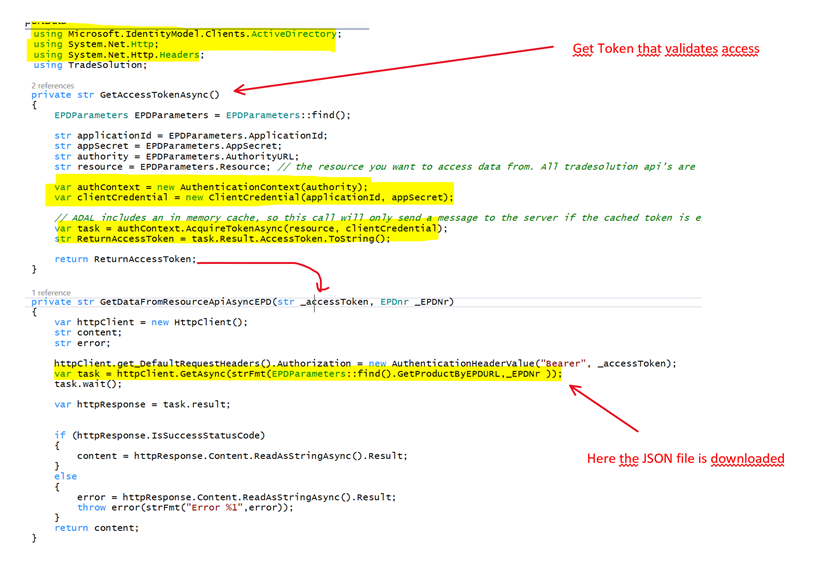

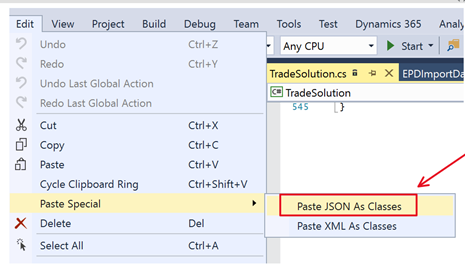

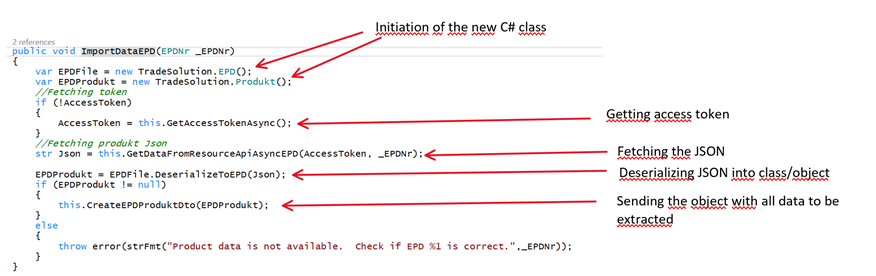

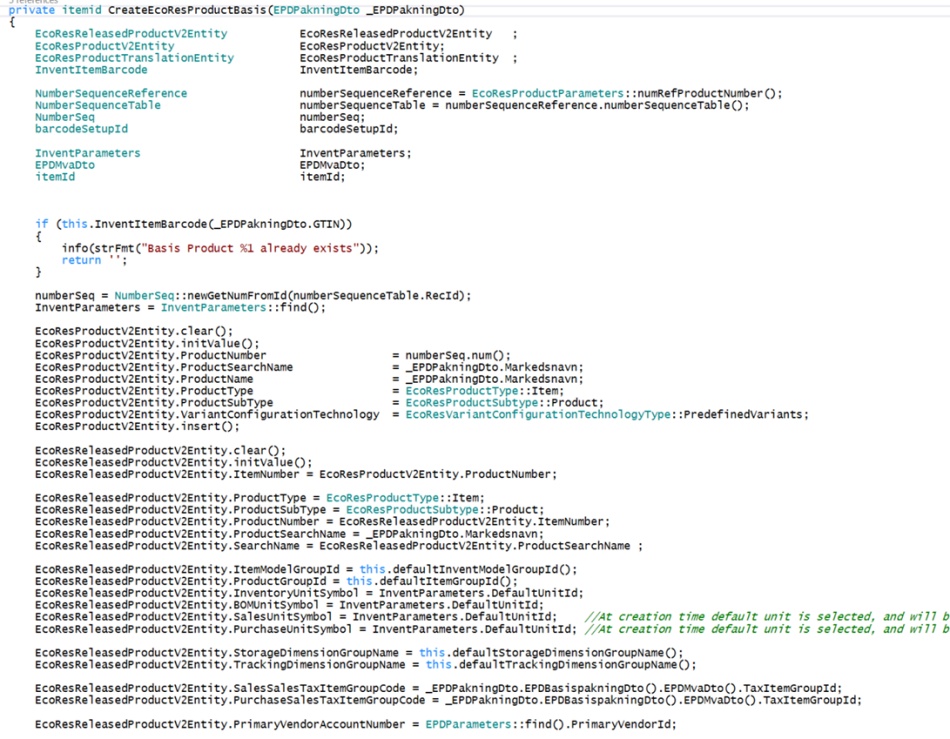

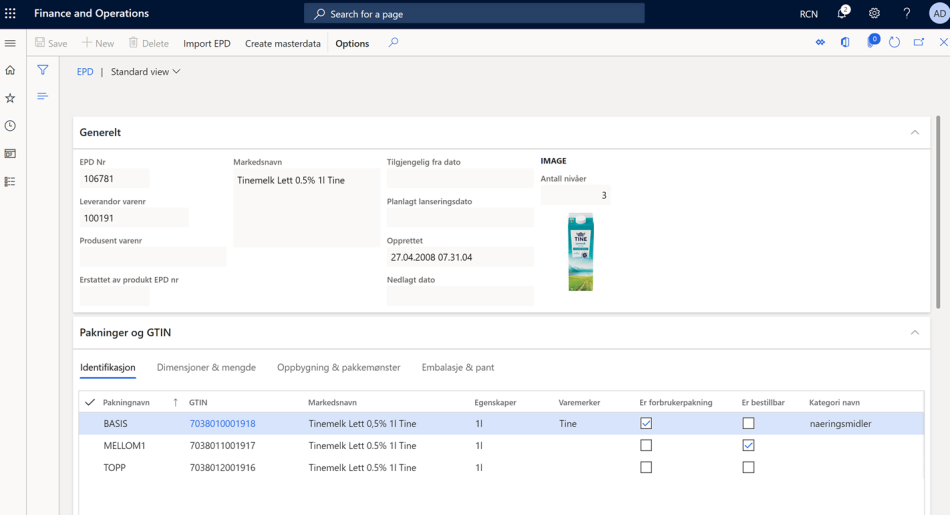

I recently created a solution for importing products and all related data for the grocery industry, and I wanted to share my experience so that others can follow. This is not a “copy-paste” blog post. Rather, it shows my approach to the process, which can be used when working with more advanced and complex JSON integrations.

Many industries have established vertical-specific databases where producers, distributors and stores cooperate and have agreed on standards for product numbers, product naming, GTIN, Global Location Number (GLN), etc. In Norway we have several, and the most common one for the grocery industry is TradeSolution. Most products are available to the public at VetDuAt.no, but TradeSolution also has a Swagger API from which the JSON data can be fetched and imported into D365.

One thing I experienced when starting this journey is that D365 is not modelled the way the data in these industry-specific public databases is. Much is different, and the data is often structured differently. The product databases are also quite rich in describing the products: physical dimensions, attributes, packing structure, allergens, nutrition, etc. To give you an idea of the complexity you can often find, here is a subset of the JSON hierarchy:

I needed to decide how to import this data. Should I just import what I have fields for in D365? Should I extend D365 with lots and lots of new fields? Or should I model it according to how the external database presents the data? I decided on the latter and imported the data as it was presented, because this gives the best result and the least information loss in the process.

I went for a model where D365 requests a JSON file from the Swagger API and places the JSON structure into a C# class structure. The data is then extracted from the C# objects and placed into a new module I named EPD.
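The design choice (keep the source data as presented, and decide later what reaches the standard tables) can be illustrated with a minimal Python sketch. It is not the author's D365 code: `stage`, `publish`, `run_import` and `TARGET_FIELDS` are hypothetical names, and the field list is a toy assumption. The point is that the staging copy keeps every source attribute, while only the publish step projects onto the fields the target supports.

```python
import json
from typing import Callable, Dict, Tuple

# Hypothetical: the only product fields the "standard" table has in this toy example.
TARGET_FIELDS = ("gtin", "name")


def stage(document: Dict) -> Dict:
    """Staging copy: keep the record as the source presents it (no fields dropped)."""
    return dict(document)


def publish(staged: Dict) -> Dict:
    """Populate the 'standard' record with the subset of fields it supports."""
    return {key: staged[key] for key in TARGET_FIELDS if key in staged}


def run_import(fetch: Callable[[str], str], product_id: str) -> Tuple[Dict, Dict]:
    """fetch -> parse -> stage -> publish, returning (staged, published)."""
    document = json.loads(fetch(product_id))
    staged = stage(document)
    return staged, publish(staged)
```

For example, with a fake fetcher returning `{"gtin": "123", "name": "Milk", "allergens": ["MILK"]}`, the staged record still holds `allergens`, while the published record contains only `gtin` and `name`. Extending the target later means changing `publish`, not re-importing.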
The next step in the process takes this data and populates the standard D365 tables. The benefit I see is that I’m not overextending the standard Microsoft code. The data is available in D365 and can be used in Power BI, etc.

I would like to share some of the basic steps for fetching such large data structures from external services.

Fetch the JSON from the service

To fetch a JSON file, I’m using some .NET references that help handle Active Directory and HTTP connections. The first method shows how to get an access token, which is relevant if the Swagger service requires one. The next method is where the Swagger URL is queried and the JSON file is returned, with some success/error handling in addition.

So at this point we have the JSON file, and we want to do something meaningful with it. Visual Studio has a wonderful feature where you can paste JSON and convert it into classes (Edit > Paste Special > Paste JSON As Classes). To make this work, you have to create a C# project.

This generates the C# classes. In this example the number of sub-objects and the number of properties are in the hundreds, and properties can be objects and even arrays of objects.

In addition, I need a method that takes the JSON file and deserializes its content into these classes.

Store the JSON object data into D365 tables

At this point we have been able to fetch the data. In the following code, I’m getting the access token, getting the JSON, deserializing it into a C# object, and passing it on for further processing.

Now, let’s start inserting this data into new D365 tables. For simplicity, I have created a D365 table for each data object in the JSON file. This allows me to store the entire hierarchical JSON structure in D365 tables for further processing. As soon as the data is stored in D365, I can write the code that moves it forward into the more functional tables in D365.
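For readers outside .NET, here is a rough Python equivalent of the token, fetch and deserialize steps, using only the standard library. It assumes an OAuth 2.0 client-credentials grant (RFC 6749, section 4.4); `TOKEN_URL`, `PRODUCT_URL`, the scope and the `Product`/`Allergen` fields are hypothetical placeholders, since the real TradeSolution API details are not given in this post. The dataclasses stand in for the classes generated by “Paste JSON as classes”, and the `opener` parameter lets the HTTP call be swapped out in tests.

```python
import json
import urllib.error
import urllib.parse
import urllib.request
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical endpoints: use the values from the provider's Swagger documentation.
TOKEN_URL = "https://auth.example.com/oauth2/token"
PRODUCT_URL = "https://api.example.com/products/{gtin}"


class FetchError(Exception):
    """Raised when the product service answers with an HTTP error status."""


def get_access_token(client_id: str, client_secret: str, scope: str,
                     opener: Callable = urllib.request.urlopen) -> str:
    """Request a bearer token using the OAuth 2.0 client-credentials grant."""
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": scope,
    }).encode("ascii")
    request = urllib.request.Request(
        TOKEN_URL, data=body, method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"})
    with opener(request) as response:
        return json.loads(response.read())["access_token"]


def fetch_product_json(gtin: str, token: str,
                       opener: Callable = urllib.request.urlopen) -> str:
    """GET one product document; HTTP errors are re-raised as FetchError."""
    request = urllib.request.Request(
        PRODUCT_URL.format(gtin=urllib.parse.quote(gtin)),
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"})
    try:
        with opener(request) as response:
            return response.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        raise FetchError(f"HTTP {err.code} for {request.full_url}") from err


# Typed classes mirroring a tiny, hypothetical slice of the product JSON.
@dataclass
class Allergen:
    code: str
    level: str


@dataclass
class Product:
    gtin: str
    name: str
    allergens: List[Allergen] = field(default_factory=list)

    @classmethod
    def from_json(cls, text: str) -> "Product":
        """Deserialize JSON text into Product and nested Allergen objects."""
        data = json.loads(text)
        return cls(
            gtin=data["gtin"],
            name=data["name"],
            allergens=[Allergen(**item) for item in data.get("allergens", [])],
        )
```

With real credentials, `Product.from_json(fetch_product_json(gtin, get_access_token(...)))` chains the steps. Because `urlopen` raises `HTTPError` for non-2xx answers, a failed call surfaces as `FetchError` instead of a half-parsed document.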
A lesson learned was that when creating sub-tables to store hierarchical JSON data, it is sometimes necessary to create relationships between records in multiple tables. Sometimes uniqueness is also required, and the best way I have found (so far) is to create a GUID field and use this GUID to relate the data in the different tables. This can easily be accomplished with the following code.

Create the standard D365 data using data entities through code

At this stage I have ALL the data in D365, and I can start processing it. Here is a subsection of how I create released products using standard data entities: a table containing the JSON data is sent in, and I can create the products and all sub-tables related to them.

This approach has resulted in a solution where it is easy for the end user to fetch data from external systems and import it into D365. Here is a form showing parts of the “staging” information before it is moved into the D365 standard tables. (The form is in Norwegian and shows a milk product.)

I would like to thank the community for all the inspiring information out there, especially Martin Dráb (@goshoom), who has been very active in promoting “Paste JSON as classes” in Visual Studio.
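The GUID technique for relating hierarchical staging rows can be sketched in Python. This is an illustration under assumptions, not the author’s D365 code: staging “tables” are plain lists of dicts named after their JSON path, each row gets a fresh `uuid4` as its key plus the key of its parent, and `to_released_product` is a hypothetical mapping standing in for filling a released-product data entity (its output keys are made up).

```python
import uuid
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


def flatten(node: Dict[str, Any], table: str, tables: Dict[str, List[Row]],
            parent_id: Optional[str] = None) -> str:
    """Store one JSON object as a row in `table`; nested objects go to child tables.

    Every row gets its own GUID plus its parent's GUID, so the hierarchy
    can be rebuilt later by joining on parent_id.
    """
    row_id = str(uuid.uuid4())
    row: Row = {"id": row_id, "parent_id": parent_id}
    tables.setdefault(table, []).append(row)  # parent row is stored before its children
    for key, value in node.items():
        child_table = f"{table}.{key}"
        if isinstance(value, dict):
            flatten(value, child_table, tables, row_id)
        elif isinstance(value, list) and value and all(isinstance(v, dict) for v in value):
            for item in value:
                flatten(item, child_table, tables, row_id)
        else:
            row[key] = value  # scalars, and lists of scalars, stay on this row
    return row_id


def to_released_product(tables: Dict[str, List[Row]], product_id: str) -> Row:
    """Hypothetical mapping from staging rows to a released-product record."""
    product = next(r for r in tables["product"] if r["id"] == product_id)
    allergen_codes = [r["code"] for r in tables.get("product.allergens", [])
                      if r["parent_id"] == product_id]
    return {"item_number": product["gtin"], "product_name": product["name"],
            "allergen_codes": allergen_codes}
```

Using a GUID rather than a running number means a key can be generated before the parent row is saved anywhere, and keys stay unique across tables and import runs.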


Tags: d365fo, json, integration